- Bio-Medical Imaging at Max-IV

Martin Bech / Lund University

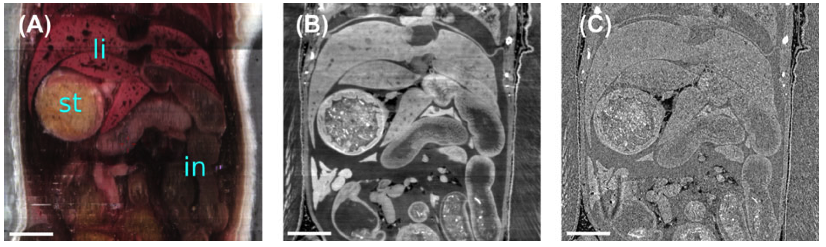

Phase-contrast x-ray imaging has recently been shown to give improved contrast in soft-tissue samples. In particular with highly brilliant synchrotron radiation, different approaches using coherent x-rays can give very good contrast at resolutions ranging from micrometers down to a few tens of nanometers. This makes it an excellent tool for studying three-dimensional morphology non-destructively.

In the northern part of Lund, the Max-IV synchrotron is under construction and will be ready for its first scientific experiments in 2016. But what kind of science can we do at Max-IV? One of the beamlines to be installed there is MedMax, a beamline for biological and medical imaging. I will discuss previous examples of biomedical synchrotron phase-contrast imaging experiments and the possibilities opening up at Max-IV.


Figure: Coronal views of a mouse abdomen: (A) cryo-sliced image, and virtual cuts through (B) phase-contrast tomography and (C) attenuation CT. The stomach (st), liver (li) and intestines (in) are labeled. The scale bar indicates 5 mm. (Tapfer, Bech et al. 2014, J. of Microscopy, 253(1) 24-30)
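To illustrate why coherent x-rays give contrast where attenuation does not, here is a minimal sketch of propagation-based phase contrast, which is only one of several coherent approaches and not necessarily the one used in the cited work. It assumes a 1D pure phase object, paraxial Fresnel propagation via an FFT transfer function, and illustrative values for pixel size, wavelength and detector distance; the names `fresnel_propagate` and `contrast` are hypothetical.

```python
import numpy as np

def fresnel_propagate(field, dx, wavelength, z):
    """Propagate a 1D complex field a distance z using the paraxial Fresnel transfer function."""
    fx = np.fft.fftfreq(field.size, d=dx)  # spatial frequencies in 1/m
    transfer = np.exp(-1j * np.pi * wavelength * z * fx**2)
    return np.fft.ifft(np.fft.fft(field) * transfer)

def contrast(intensity):
    """Michelson contrast (Imax - Imin) / (Imax + Imin)."""
    return (intensity.max() - intensity.min()) / (intensity.max() + intensity.min())

# Hypothetical pure phase object: a 224 um wide strip that shifts the phase by 0.5 rad
# but absorbs nothing, so it is invisible in an attenuation image.
n, dx = 1024, 1e-6       # 1 um pixels (assumed)
wavelength = 1e-10       # 0.1 nm, roughly 12 keV x-rays (assumed)
phase = np.zeros(n)
phase[400:624] = 0.5
exit_wave = np.exp(1j * phase)

for z in (0.0, 0.5):     # detector distances in metres (assumed)
    I = np.abs(fresnel_propagate(exit_wave, dx, wavelength, z)) ** 2
    print(f"z = {z} m: contrast = {contrast(I):.3f}")
```

Directly behind the sample (z = 0) the intensity is flat, so the strip shows no contrast; after free-space propagation, interference at the strip edges turns the phase shift into measurable intensity fringes.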

- Measuring Radiometric Properties and the Appropriateness of Existing Analytic BRDF Models

Jannik Boll Nielsen / DTU Compute

We will address the need for parsimonious (i.e. good, low-parameter) radiometric models when measuring material reflectance properties. The reason is that in many practical applications, e.g. visual quality control and reverse engineering, we in essence have only a few measurements of a material's radiometric properties.
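As an illustration of what "low parameter" means in practice, the sketch below fits a two-parameter model to a handful of reflectance measurements. The abstract does not name a specific BRDF model; normalized Blinn-Phong (diffuse weight `kd`, specular weight `ks`, fixed exponent) is our assumed example, chosen because it is linear in `kd` and `ks` and so can be fitted by ordinary least squares. All geometries and values are hypothetical.

```python
import numpy as np

NORMAL = np.array([0.0, 0.0, 1.0])  # surface normal (assumed flat sample)

def normalize(v):
    v = np.asarray(v, float)
    return v / np.linalg.norm(v)

def blinn_phong_basis(light, view, shininess):
    """Return the (diffuse, specular) basis terms of a normalized Blinn-Phong BRDF."""
    h = normalize(normalize(light) + normalize(view))  # half vector
    cos_h = max(float(np.dot(NORMAL, h)), 0.0)
    diffuse = 1.0 / np.pi
    specular = (shininess + 8.0) / (8.0 * np.pi) * cos_h ** shininess
    return diffuse, specular

def fit_kd_ks(lights, views, brdf_values, shininess):
    """Least-squares fit of kd and ks for a fixed shininess exponent."""
    A = np.array([blinn_phong_basis(l, v, shininess) for l, v in zip(lights, views)])
    (kd, ks), *_ = np.linalg.lstsq(A, np.asarray(brdf_values, float), rcond=None)
    return kd, ks

# Five hypothetical light/view geometries and synthetic, noise-free measurements.
lights = [(0.3, 0, 1), (0.5, 0.2, 1), (-0.4, 0.1, 1), (0, 0.6, 1), (0.2, -0.3, 1)]
views = [(-0.3, 0, 1), (-0.2, 0.1, 1), (0.5, 0, 1), (0, -0.5, 1), (0.6, 0.4, 1)]
true_kd, true_ks, shininess = 0.6, 0.3, 20.0
measured = [true_kd * d + true_ks * s
            for d, s in (blinn_phong_basis(l, v, shininess) for l, v in zip(lights, views))]
kd_fit, ks_fit = fit_kd_ks(lights, views, measured, shininess)
```

With only two free parameters, five measurements already over-determine the fit; whether such a model is *appropriate* for a real material is exactly what the residuals on real data would reveal.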

- 2D Static Light Scattering for Dairy Based Applications

Jacob Lercke Skytte / DTU Compute

2D Static Light Scattering (2DSLS) is a novel hyperspectral (~450-1030 nm) optical technique in which multiple light scattering phenomena can be observed and related to the microstructural properties of the sample under investigation. Furthermore, the technique is remote and non-invasive, which potentially makes it suitable for in-line process control. The talk introduces the 2DSLS technique, covering the basics as well as ongoing work. Finally, a case study will be presented in which 2DSLS is applied to protein microstructures in stirred yogurt products.

- GPS/GNSS-based Positioning, Navigation and Timing - Status and Evolution

Anna B.O. Jensen / AJ Geomatics

GPS is today very well known as a system for positioning, navigation and timing, for instance in smartphones, car navigation systems and aircraft navigation. But there are more global navigation satellite systems (GNSS) than the American GPS. The operational Russian GLONASS system, together with the European Galileo and Chinese Beidou systems under development, is driving rapid development in the field of positioning and navigation applications. This presentation will cover the current status of the satellite systems and the performance obtainable in user applications. The evolution expected over the next few years will also be reviewed, with a focus on the European Galileo and on the advantages of combining GPS with the other global navigation satellite systems.
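To make "combining GPS with the other systems" concrete, here is a minimal sketch of a single-epoch least-squares position fix from pseudoranges. It assumes noise-free, already-corrected pseudoranges, known satellite positions in an Earth-centred frame, and one receiver clock bias per constellation (a common way to handle inter-system time offsets). The function name, the satellite geometry and the bias values are all hypothetical.

```python
import numpy as np

def solve_position(sat_pos, pseudoranges, system_ids, iterations=10):
    """Gauss-Newton least-squares fix with one receiver clock bias (in metres) per system.

    sat_pos: (m, 3) satellite positions in metres; pseudoranges: (m,) in metres;
    system_ids: length-m labels such as "GPS" or "GAL".
    """
    sat_pos = np.asarray(sat_pos, float)
    rho = np.asarray(pseudoranges, float)
    systems = sorted(set(system_ids))
    col = {s: 3 + i for i, s in enumerate(systems)}   # column of each system's clock bias
    x = np.zeros(3 + len(systems))                    # start at Earth's centre, zero bias
    for _ in range(iterations):
        diff = x[:3] - sat_pos
        ranges = np.linalg.norm(diff, axis=1)
        H = np.zeros((len(rho), x.size))
        H[:, :3] = diff / ranges[:, None]             # partial derivatives w.r.t. position
        predicted = ranges.copy()
        for k, s in enumerate(system_ids):
            H[k, col[s]] = 1.0
            predicted[k] += x[col[s]]
        dx, *_ = np.linalg.lstsq(H, rho - predicted, rcond=None)
        x += dx
    return x[:3], {s: x[col[s]] for s in systems}

# Hypothetical scenario: 4 GPS and 3 Galileo satellites, each system with its own clock bias.
receiver = np.array([6371e3, 0.0, 0.0])
directions = np.array([[1, 0, 0], [0.6, 0.8, 0], [0.6, -0.8, 0], [0.6, 0, 0.8],
                       [0.6, 0, -0.8], [0.8, 0.4, 0.45], [0.7, -0.5, 0.5]], float)
directions /= np.linalg.norm(directions, axis=1)[:, None]
systems = ["GPS"] * 4 + ["GAL"] * 3
radii = np.where(np.array(systems) == "GPS", 26_560e3, 29_600e3)  # approximate orbit radii
sats = directions * radii[:, None]
bias = {"GPS": 3000.0, "GAL": 3050.0}
rho = np.linalg.norm(sats - receiver, axis=1) + np.array([bias[s] for s in systems])
pos, clocks = solve_position(sats, rho, systems)
```

The trade-off is visible in the design matrix: each extra constellation adds one unknown (its clock bias) but contributes all of its satellites as observations, which improves geometry and availability.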

- A Glimpse Through The Letterbox: Quality Inspection of Lyophilized Product in The Pharmaceutical Industry

Kartheeban Nagenthiraja / InnoScan A/S

Background: Lyophilization (Lyo), or freeze-drying, of parenteral drugs extends the shelf life of pharmaceutical products, and the process has therefore gained wider application in recent years. Recently, InnoScan was asked to develop a robust method to detect dark particles on top of the lyophilized mass, the so-called 'lyo cake'. The main challenge was not detecting the dark particles, but avoiding false detection of acceptable product variations. Lyo cakes are typically shrunken and cracked, with crevices of various sizes that could be falsely detected as defects. Furthermore, the task was complicated by the design of the vial: a non-transparent cap covering the shoulder of the vial restricted the view of the cake surface. To protect the customer's investment, a low false rejection rate was pivotal. Overall, the aim of the project was to develop a robust vision-based technology to detect dark particles on the surface of lyo cakes while maintaining an acceptable specificity.

Method: To acquire images of the lyo cake, we combined line-scan technology with rotation of the vial. The narrow acquisition window makes the problem similar to describing the pattern of a doormat by looking through a letterbox. The letterbox provides only a partial view of the mat; however, if the mat is rotated 360° in increments while images of the view are acquired, a complete picture of the mat can be composed. In general terms, we used this approach to image the lyo cake surface, and the surface image was subsequently analysed by an algorithm to detect particles. The challenging task for the detection algorithm is differentiating between dark particles and crevices in the cake. Collimated light applied to the surface gives a distinct reflection pattern depending on whether the target is a crevice or a particle. Because the vial rotates, the reflection pattern of each object can be observed from multiple angles; a particle gives a uniform reflection pattern regardless of the viewing angle, so it can be detected by autocorrelation. The detection technique was tested on 100 vials, 20 of which contained a dark particle on the surface. The performance of the developed technology was compared with manual inspection carried out by trained personnel.
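The two ideas in the Method paragraph, composing a full surface from letterbox-sized line scans and separating particles from crevices by how consistent their reflection pattern is across viewing angles, can be sketched as below. This is not InnoScan's algorithm: the stacking scheme, the use of normalized correlation between successive views as a stand-in for the autocorrelation step, and the 0.8 threshold are all our assumptions, and the function names are hypothetical.

```python
import numpy as np

def compose_surface(line_scans):
    """Stack one line scan per rotation increment into a 2D surface image (angle x position)."""
    return np.vstack([np.asarray(line, float) for line in line_scans])

def angular_consistency(views):
    """Mean normalized correlation between one object's reflection patch seen at successive
    rotation angles. Near 1: the pattern is the same from every angle (particle-like).
    Near 0: the pattern changes with angle (crevice-like)."""
    scores = []
    for a, b in zip(views[:-1], views[1:]):
        a = np.ravel(a) - np.mean(a)
        b = np.ravel(b) - np.mean(b)
        denom = np.linalg.norm(a) * np.linalg.norm(b)
        scores.append(float(a @ b / denom) if denom > 0 else 0.0)
    return float(np.mean(scores))

def is_particle(views, threshold=0.8):
    """Hypothetical decision rule; the threshold would have to be tuned on real vials."""
    return angular_consistency(views) >= threshold

# Synthetic example: a particle shows the same patch at all 8 angles,
# a crevice shows an unrelated patch at each angle.
rng = np.random.default_rng(0)
pattern = rng.random(64)
particle_views = [pattern] * 8
crevice_views = [rng.random(64) for _ in range(8)]
```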

Results: The implemented technology performed well in testing and outperformed manual inspection, which is the gold standard prescribed by the regulatory authorities. Specifically, the automated technology was superior in differentiating between micro crevices and dark particles. Furthermore, the machine's line speed of 350 vials/minute replaces a manual workforce of 87.5 persons.

Discussion: To comply with regulatory demands, an automated inspection must show an equal or better detection rate (DR) than the manual baseline. Our automated technique for detecting dark particles on lyo cakes demonstrated better performance than manual inspection and will be implemented at the customer's production site.
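As a worked reading of the numbers above: assuming DR is the fraction of the 20 particle-containing test vials that are rejected, and the false rejection rate (FRR) is the fraction of the 80 particle-free vials that are rejected (both definitions are our assumption; the abstract gives neither formula nor counts), the quantities and the throughput comparison are:

$$
\mathrm{DR} = \frac{\text{particle vials rejected}}{20}, \qquad
\mathrm{FRR} = \frac{\text{particle-free vials rejected}}{80}, \qquad
\frac{350\ \text{vials/min}}{87.5\ \text{inspectors}} = 4\ \text{vials/min per inspector}
$$

The last ratio is simply the manual inspection rate implied by the stated equivalence, not a separately reported figure.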

- Vision Systems for Glassworks

Jørgen Læssøe / JLI Vision A/S

Glassworks are mass-producing factories. On some production lines, the speed exceeds 10 parts per second, so automatic inspection is essential.

Inspection is traditionally done at the cold end, but it can also be applied at the hot end, just after the glass is formed. This presents quite a few challenges: protecting the equipment from the environment requires a lot of engineering to keep the heat away and to protect the optical parts from oil contamination.

Inspecting at the hot end means that the machine operators get instant feedback when one of the many tools in the forming process drifts. Without hot-end inspection, you have to wait up to one hour before the cold-end equipment reports the problems.

The vision systems must operate at high speed and find details smaller than 0.05 mm². As the glass is red-hot and soft, it cannot be handled, so the vision system must work well without alignment of the tableware or containers.
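The two requirements above (more than 10 parts per second, details smaller than 0.05 mm²) translate directly into a time budget and a resolution budget. The sketch below does that arithmetic under stated assumptions: a square defect, at least two pixels across it, and a hypothetical 300 mm field of view; none of these figures come from the talk.

```python
import math

def time_budget_ms(parts_per_second):
    """Maximum processing time per part if inspection must keep up with the line."""
    return 1000.0 / parts_per_second

def max_pixel_size_mm(min_defect_area_mm2, pixels_across=2):
    """Largest pixel size that still puts `pixels_across` pixels across a square defect
    of the given area (square defect and simple sampling rule are assumptions)."""
    side_mm = math.sqrt(min_defect_area_mm2)
    return side_mm / pixels_across

def pixels_needed(field_of_view_mm, pixel_mm):
    """Sensor pixels needed to cover a field of view at the given pixel size."""
    return math.ceil(field_of_view_mm / pixel_mm)

budget = time_budget_ms(10)                   # 100.0 ms per part at 10 parts/s
pixel = max_pixel_size_mm(0.05)               # about 0.11 mm per pixel
sensor_width = pixels_needed(300.0, pixel)    # hypothetical 300 mm wide field of view
```

At higher line speeds the time budget shrinks further, which is one reason such systems cannot rely on mechanically aligning the hot parts before imaging.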

Inspecting tableware requires many backlighting patterns to reveal the different optical defects. To achieve this, a dynamic light box is used.

The presentation will discuss the design, programming and installation of these vision systems in the harsh glassworks environment.

- Finite Element Modeling of Micro Scale Surface Phenomena

Mary Kathryn Thompson / DTU Mechanics

Micro scale surface phenomena can have a substantial impact on the macro scale behavior of engineering systems. Surface metrology data can be used to construct finite element models with real surface roughness. These models can then be used to predict the system behavior and to design functional surfaces. However, the characterization and validation of these models remains a challenge. This presentation provides an overview of finite element surface modeling. It presents two case studies related to fluid sealing and thermal contact resistance. It discusses the relationship between mechanical design and image processing in this context. Finally, it outlines the challenges and opportunities for using statistical and image processing techniques to analyze and compare the results of FE surface models.
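A first step in building such models is turning a measured height map into something a finite element code can use, and summarizing the roughness it contains. The sketch below computes the areal parameters Sa and Sq (as defined in ISO 25178, here without any form removal or filtering) and converts the height map into nodes and 4-node quadrilateral surface elements. The element type and the function names are our assumptions; a real model would typically extrude this surface into a volume mesh.

```python
import numpy as np

def areal_roughness(heights):
    """Sa (arithmetic mean height) and Sq (root mean square height) of a height map,
    measured from the mean plane (no form removal or filtering applied)."""
    z = np.asarray(heights, float)
    dev = z - z.mean()
    return float(np.mean(np.abs(dev))), float(np.sqrt(np.mean(dev ** 2)))

def height_map_to_mesh(heights, dx, dy):
    """Turn a height map into nodes (x, y, z) and 4-node quad surface elements.

    Node index is row * nx + column; each element lists its corners counterclockwise
    when viewed from above.
    """
    z = np.asarray(heights, float)
    ny, nx = z.shape
    ys, xs = np.meshgrid(np.arange(ny) * dy, np.arange(nx) * dx, indexing="ij")
    nodes = np.column_stack([xs.ravel(), ys.ravel(), z.ravel()])
    idx = np.arange(ny * nx).reshape(ny, nx)
    elements = np.column_stack([
        idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel(),
        idx[1:, 1:].ravel(), idx[1:, :-1].ravel(),
    ])
    return nodes, elements

sa, sq = areal_roughness([[0.0, 2.0], [2.0, 0.0]])
nodes, elements = height_map_to_mesh([[0.0, 2.0], [2.0, 0.0]], dx=1.0, dy=1.0)
```

Because the mesh nodes are the measurement points themselves, the same Sa/Sq computation can be applied to simulated and measured surfaces, which is one simple way to compare FE results against metrology data.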

- Vision for robotics - Why estimating pose uncertainty is important

Henrik Gordon Petersen / The Maersk Mc-Kinney Moller Institute

In this talk, examples will be given of the robotic automation challenges in the current project CARMEN ("Center for Advanced Robotic Manufacturing ENgineering"), funded by The Danish Council for Strategic Research, and in the SPIR project "MADE - Platform for Future Production". The emphasis will be on examples of assembling parts that are initially randomly located. The assembly can be achieved either by specialized feeders that move the parts from random locations to a well-defined, aligned location, or by using vision for bin- or belt-picking. Either way, the parts will end up at locations that are known only up to some pose uncertainty. It will be discussed how these uncertainties affect the robustness of the execution of the assembly tasks, and why it will (at least in the future) be important to have reliable estimates of the probability distribution of the 6D pose uncertainty.
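Why the full 6D distribution matters can be illustrated with a small Monte Carlo sketch: given a pose error distribution for a picked part, estimate how often a point on the part (for example an insertion feature some distance from the part's origin) stays within an assembly tolerance. The zero-mean Gaussian model over (translation, rotation vector), the tolerance and the lever arm are all our assumptions, not the project's method, and the function names are hypothetical.

```python
import numpy as np

def rotvec_to_matrix(w):
    """Rodrigues' formula: rotation vector (axis * angle, radians) to a 3x3 rotation matrix."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K

def success_probability(cov6, point, tolerance, n=2000, seed=0):
    """Monte Carlo estimate of P(displacement of `point` <= tolerance) when the part pose
    error is zero-mean Gaussian with covariance cov6 over (tx, ty, tz, rx, ry, rz)."""
    rng = np.random.default_rng(seed)
    point = np.asarray(point, float)
    samples = rng.multivariate_normal(np.zeros(6), cov6, size=n)
    hits = 0
    for s in samples:
        moved = rotvec_to_matrix(s[3:]) @ point + s[:3]   # perturbed position of the point
        if np.linalg.norm(moved - point) <= tolerance:
            hits += 1
    return hits / n

# Hypothetical cases: a feature 0.1 m from the part origin, 1 mm assembly tolerance.
tight = np.diag([1e-10] * 6)                  # nearly perfectly known pose
loose = np.diag([4e-6] * 3 + [1e-6] * 3)      # ~2 mm and ~1 mrad standard deviations
p_tight = success_probability(tight, [0.1, 0.0, 0.0], 1e-3)
p_loose = success_probability(loose, [0.1, 0.0, 0.0], 1e-3)
```

Note that rotational uncertainty is amplified by the lever arm to the feature, so a single "position error" number is not enough; the estimate needs the joint translational and rotational distribution.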

- From Research to Industrial Robot Vision

Michael Nielsen / TI Odense

This talk will address the contrasts and challenges between the two worlds of research and industrial robot vision. It is a clash of cultures, so to speak, with the usual prejudices to overcome. The challenges and processes differ in all stages of the projects. A specialist, which computer vision engineers usually are, needs to acquire more general skills and understand the systems that the vision system has to be integrated with. Robot vision typically consists of two parts: vision for guiding the robot, and vision for quality inspection to see what happened after the robot did its work. Examples of both will be presented during the talk.

- LED spectral imaging

Jens Michael Carstensen / DTU Compute and Videometer A/S

Spectral imaging is a very versatile technique for food, pharma and agri product quality assessment. It may simultaneously measure a broad range of food safety and food quality parameters, and it does so rapidly, non-destructively, and without physical contact with the sample. Alternatives are typically time-consuming, labor-intensive, or require highly trained sensory panels.

LED spectral imaging uses narrow-band LEDs to provide the spectral resolution on a high-resolution monochrome camera sensor: different LEDs are strobed into an integrating sphere, which then illuminates the sample diffusely and very homogeneously. Compared to hyperspectral imaging, LED spectral imaging does not require movement of the sample, and it generally provides higher spatial resolution and higher speed at the cost of lower spectral resolution. One important advantage of LED spectral imaging is that the dynamic range may be optimized for each wavelength simply by adjusting the strobe intensity and/or the strobe length. Another advantage is that it does not depend on a broadband incandescent light source, which will typically be subject to stability issues.
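The per-wavelength dynamic range optimization can be sketched as a simple rescaling of strobe lengths from a test exposure. This assumes a sensor response that is linear in strobe time with no saturation in the test exposure, a hypothetical 12-bit full scale of 4095 counts, and a target of 80 % of full scale; it adjusts only strobe length, not intensity, and the function names and band labels are hypothetical.

```python
def optimized_strobe_ms(initial_ms, peak_counts, full_scale=4095, target_fraction=0.8,
                        max_ms=None):
    """Scale each LED's strobe time so its brightest pixel lands at target_fraction of the
    sensor's full scale (assumes signal is linear in strobe time)."""
    target = target_fraction * full_scale
    result = {}
    for band, t in initial_ms.items():
        new_t = t * target / peak_counts[band]
        if max_ms is not None:
            new_t = min(new_t, max_ms)   # respect a hardware limit on strobe length
        result[band] = new_t
    return result

def normalize_by_exposure(images, strobe_ms):
    """Divide each band's counts by its strobe time so bands are directly comparable."""
    return {band: [c / strobe_ms[band] for c in counts] for band, counts in images.items()}

# Hypothetical test exposure: 1 ms for every band, peak counts measured per band (nm).
strobes = optimized_strobe_ms({450: 1.0, 940: 1.0}, {450: 1000, 940: 3000})
```

A dim band (450 nm here) gets a longer strobe and a bright band a shorter one, so every band uses most of the sensor's range; dividing by strobe time afterwards puts the bands back on a common scale.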
