Towards Event-driven Animation

Alan Watt, University of Sheffield

After a brief look at the past and present, the talk will examine the future of real-time animation by looking at two of the major challenges of event-driven animation: character animation and facial animation for visual speech. High-quality character animation, currently supplied by very expensive and inconvenient MOCAP technology or by equally inconvenient forward kinematics, is demanded by many existing and future applications. We would like to be able to synthesize motion for both these applications in real time. High-quality visual speech could pave the way towards more natural human-computer interaction and would be the foundation technology of such an ‘anthropomorphic interface’. Both problems are partially solved in real time as event-driven animation, but we still rely heavily on the model of pre-recording (or pre-designing) motion and playing it back in real time. However, pre-recorded motion can be retargeted in real time, and this is one way of retaining the quality of MOCAP while diminishing its major drawback.

As well as examining the field in general, I will describe research that we are carrying out in these areas.

Examples I will be describing in some detail

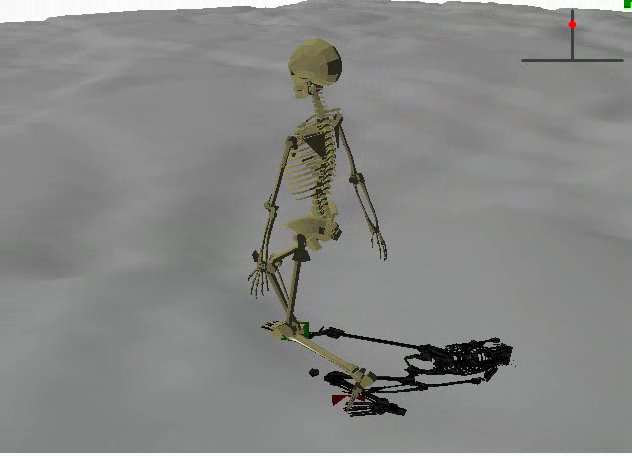

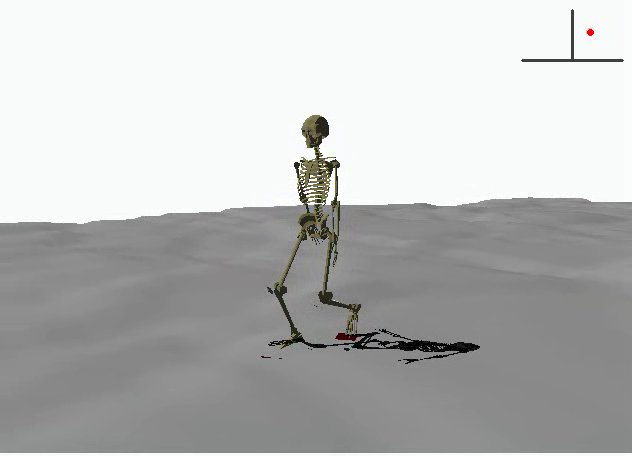

Two frames from a real-time solution, using inverse kinematics with spatial constraints, for a character walking across uneven terrain.
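As a rough illustration of the idea (not the solver used in this research), the sketch below places a foot on uneven ground with an analytic two-link inverse kinematics solution in 2D. The terrain profile `terrain_height`, the helper `plant_foot`, and the limb lengths are all hypothetical and chosen only for the example; the spatial constraint is simply that the foot must lie on the terrain surface.

```python
import math

def terrain_height(x):
    # Hypothetical uneven ground: a gentle sinusoidal profile.
    return 0.15 * math.sin(2.0 * x)

def two_link_ik(hip, target, thigh_len, shin_len):
    """Return absolute (thigh_angle, shin_angle) in radians for a 2D leg.

    If the target is out of reach, the foot is placed at the nearest
    reachable point along the hip-to-target direction.
    """
    dx, dy = target[0] - hip[0], target[1] - hip[1]
    d = math.hypot(dx, dy)
    # Clamp the distance to the reachable range of the two links.
    d = max(abs(thigh_len - shin_len) + 1e-9,
            min(thigh_len + shin_len - 1e-9, d))
    # Law of cosines: interior angle at the knee.
    cos_knee = (thigh_len**2 + shin_len**2 - d**2) / (2 * thigh_len * shin_len)
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))
    # Angle between the thigh and the hip-to-target line.
    cos_a = (thigh_len**2 + d**2 - shin_len**2) / (2 * thigh_len * d)
    a = math.acos(max(-1.0, min(1.0, cos_a)))
    # One of the two mirror solutions: the knee bends towards +x.
    thigh_angle = math.atan2(dy, dx) + a
    shin_angle = thigh_angle - (math.pi - knee)
    return thigh_angle, shin_angle

def forward_kinematics(hip, thigh_angle, shin_angle, thigh_len, shin_len):
    """Return (knee_position, foot_position) for the given joint angles."""
    knee = (hip[0] + thigh_len * math.cos(thigh_angle),
            hip[1] + thigh_len * math.sin(thigh_angle))
    foot = (knee[0] + shin_len * math.cos(shin_angle),
            knee[1] + shin_len * math.sin(shin_angle))
    return knee, foot

def plant_foot(hip, foot_x, thigh_len, shin_len):
    """Constrain the foot to the terrain surface at horizontal position foot_x."""
    target = (foot_x, terrain_height(foot_x))
    thigh_angle, shin_angle = two_link_ik(hip, target, thigh_len, shin_len)
    return forward_kinematics(hip, thigh_angle, shin_angle, thigh_len, shin_len)

if __name__ == "__main__":
    hip = (0.0, 0.9)
    for foot_x in (-0.2, 0.0, 0.2, 0.4):
        knee, foot = plant_foot(hip, foot_x, 0.5, 0.5)
        print(foot_x, knee, foot)
```

A closed-form solution like this costs only a few trigonometric calls per leg per frame, which is one reason analytic two-link solvers are a common building block in real-time settings. A full character would need 3D joints, joint limits, and body-level constraints that this sketch ignores.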

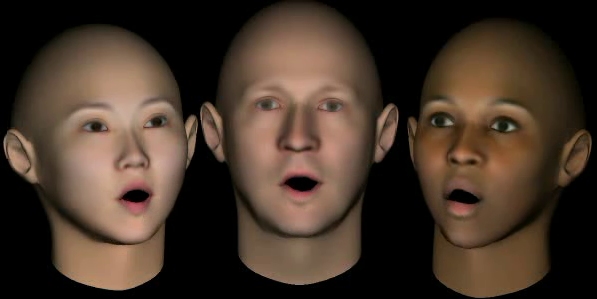

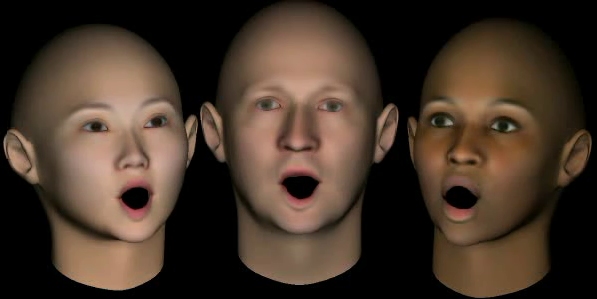

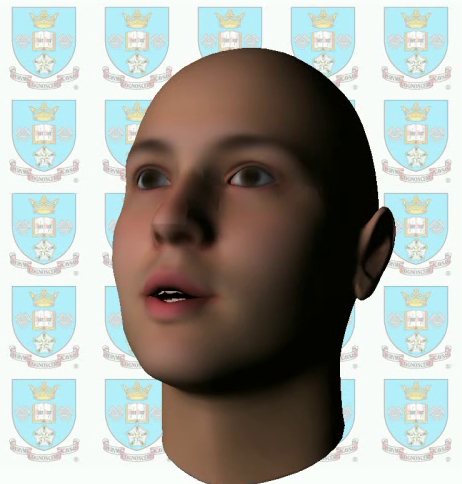

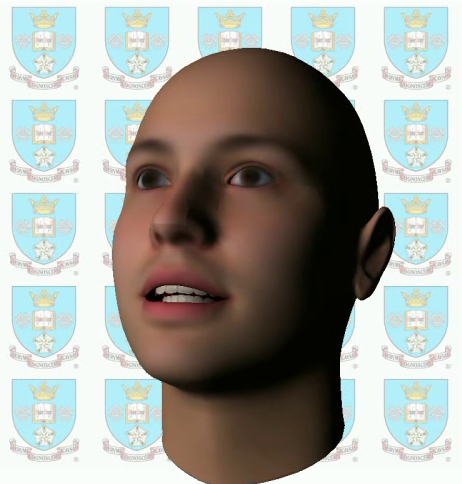

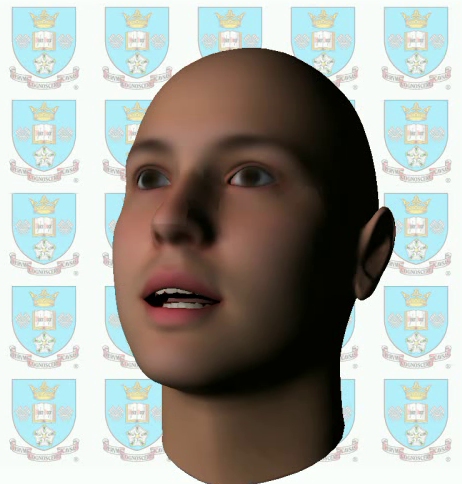

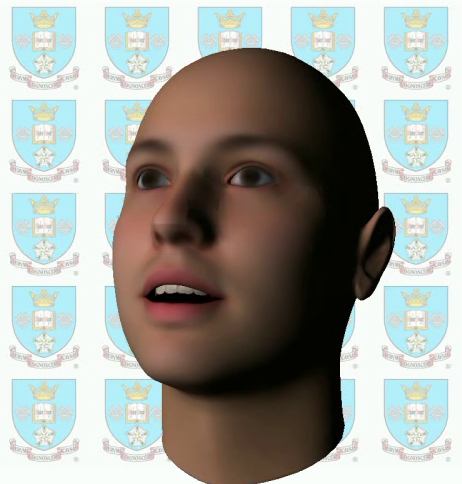

In visual speech an event-driven approach is critical, except in applications (games, perhaps) where a limited vocabulary of sentences applies. We can still use arbitrary text to drive a head whose basic motion is taken from MOCAP. We can also adapt (retarget) the motion onto different facial meshes.

In this example we use (non-physical) ‘bones’ to distort the mesh, in conjunction with interpolation using radial basis functions.
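The interpolation step can be sketched as follows, under stated assumptions: a handful of control points (standing in for bone positions) each carry a known displacement, and a Gaussian radial basis function spreads those displacements smoothly over nearby mesh vertices. The kernel choice, the `sigma` width, and the names `fit_rbf` and `deform` are illustrative and are not taken from the system described here.

```python
import math

def gaussian(r, sigma=1.0):
    # Gaussian radial basis function; sigma controls the region of influence.
    return math.exp(-(r / sigma) ** 2)

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting.

    `b` holds one right-hand-side row per equation (several columns allowed).
    """
    n = len(a)
    m = [list(row) + list(rhs) for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, len(m[r])):
                m[r][c] -= f * m[col][c]
    k = len(b[0])
    x = [None] * n
    for r in reversed(range(n)):
        x[r] = [(m[r][n + j] - sum(m[r][c] * x[c][j] for c in range(r + 1, n)))
                / m[r][r] for j in range(k)]
    return x

def fit_rbf(centers, displacements, sigma=1.0):
    """Find weights so the interpolant reproduces each control displacement."""
    a = [[gaussian(math.dist(p, q), sigma) for q in centers] for p in centers]
    return solve(a, displacements)

def deform(vertex, centers, weights, sigma=1.0):
    """Move one mesh vertex by the interpolated displacement field."""
    phis = [gaussian(math.dist(vertex, c), sigma) for c in centers]
    return tuple(v + sum(w[j] * phi for w, phi in zip(weights, phis))
                 for j, v in enumerate(vertex))

if __name__ == "__main__":
    centers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    displacements = [(0.0, 0.0, 0.5), (0.0, 0.0, 0.0), (0.1, 0.0, 0.0)]
    weights = fit_rbf(centers, displacements)
    print(deform((0.5, 0.5, 0.0), centers, weights))
```

The weights are solved once per pose of the control points, after which each vertex costs one kernel evaluation per control point. Vertices far from every control point are left essentially unmoved because the Gaussian kernel decays to zero.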

3D morphing using Principal Component Analysis + spatial constraints to achieve high quality visual speech.
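A minimal sketch of morphing in a PCA shape space is given below. It assumes that a mean shape and a set of orthonormal principal components have already been computed offline from example meshes; the toy numbers and the function names `reconstruct`, `project`, and `morph` are purely illustrative. The spatial constraints mentioned above are not modelled here.

```python
def reconstruct(mean, components, weights):
    """Build a shape vector as mean + sum_k weights[k] * components[k]."""
    return [m + sum(w * c[i] for w, c in zip(weights, components))
            for i, m in enumerate(mean)]

def project(mean, components, shape):
    """Recover PCA weights of a shape (components assumed orthonormal)."""
    centered = [s - m for s, m in zip(shape, mean)]
    return [sum(ci * di for ci, di in zip(c, centered)) for c in components]

def morph(mean, components, w_from, w_to, t):
    """Blend between two shapes by interpolating their PCA weights."""
    weights = [(1.0 - t) * a + t * b for a, b in zip(w_from, w_to)]
    return reconstruct(mean, components, weights)

if __name__ == "__main__":
    # Toy shape space: 4 coordinates, 2 orthonormal components (made up).
    mean = [0.0, 1.0, 2.0, 3.0]
    components = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.6, 0.8, 0.0]]
    closed_mouth = [0.0, 0.0]   # hypothetical weights for one mouth shape
    open_mouth = [0.5, -1.0]    # hypothetical weights for another
    for t in (0.0, 0.5, 1.0):
        print(t, morph(mean, components, closed_mouth, open_mouth, t))
```

Working in the low-dimensional weight space means that each frame of a morph costs one weighted sum per mesh coordinate, regardless of how many example meshes were used to build the basis.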